Unified MCP Server

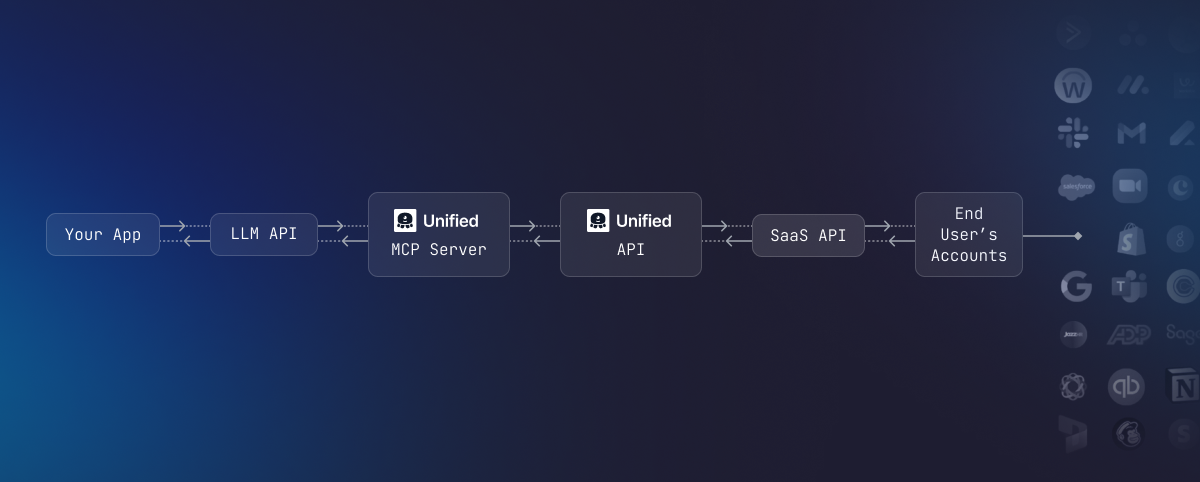

Unified has launched an MCP server that connects any Unified connection to LLM (Large Language Model) providers that support the newest MCP protocols. The available MCP tools are determined by the integration’s feature support and the connection’s requested permissions.

The Unified MCP server sits on top of our Unified API (which calls individual APIs) and hides the complexity of calling those APIs. Each call to a tool counts as 1 API request on your plan.

Warning: Most current LLMs have severe limits on how many tools they can handle. Groq's models can only handle 10 tools, while most of OpenAI's models can handle only 20 tools. Cohere's recent models seem to work with 50 tools. Make sure that you limit the available tools with the permissions or tools parameters.
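As a rough illustration of the warning above, the sketch below trims a list of tool names to fit a provider's limit before you pass it along in the tools parameter. The limits are the approximate figures quoted above and may change; the capTools helper, the provider keys, and the tool names are hypothetical and not part of any Unified SDK.

```typescript
// Approximate per-provider tool limits taken from the warning above.
// These are not authoritative and may change as providers update their models.
const TOOL_LIMITS = new Map<string, number>([
  ["groq", 10],
  ["openai", 20],
  ["cohere", 50],
]);

// Hypothetical helper: returns at most as many tool names as the provider
// is assumed to handle. Unknown providers get the list back unchanged.
function capTools(provider: string, tools: string[]): string[] {
  const limit = TOOL_LIMITS.get(provider.toLowerCase());
  if (limit === undefined) {
    return tools;
  }
  return tools.slice(0, limit);
}

// Example: 15 placeholder tool names trimmed for a Groq model.
const candidateTools = Array.from({ length: 15 }, (_, i) => `tool_${i}`);
const groqTools = capTools("Groq", candidateTools);
console.log(groqTools.length);
```

Trimming by position is the simplest policy; in practice you would probably pick the tools your prompt actually needs rather than the first N.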

Check out our sample code (in Node.js TypeScript) at unified-mcp-typescript

URLs

- Streamable HTTP: https://mcp-api.unified.to/mcp or https://mcp-api-eu.unified.to/mcp

- SSE: https://mcp-api.unified.to/sse or https://mcp-api-eu.unified.to/sse (SSE has been deprecated in the MCP protocol)

- stdin: for real? it's 2025...
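The endpoints above can be combined with the permissions or tools parameters mentioned earlier. The sketch below is a minimal example that assumes those parameters are passed as comma-separated query-string values, which this page does not confirm; the buildMcpUrl helper and the tool names are hypothetical, and any authentication or connection parameters your setup needs are left out.

```typescript
// Streamable HTTP endpoints listed above.
const MCP_ENDPOINTS = {
  us: "https://mcp-api.unified.to/mcp",
  eu: "https://mcp-api-eu.unified.to/mcp",
} as const;

type Region = keyof typeof MCP_ENDPOINTS;

interface McpUrlOptions {
  region: Region;
  permissions?: string[]; // assumed to be sent as a comma-separated query value
  tools?: string[]; // assumed to be sent as a comma-separated query value
}

// Hypothetical helper: builds an MCP server URL with optional tool filters.
function buildMcpUrl(options: McpUrlOptions): string {
  const query: string[] = [];
  if (options.permissions && options.permissions.length > 0) {
    query.push("permissions=" + encodeURIComponent(options.permissions.join(",")));
  }
  if (options.tools && options.tools.length > 0) {
    query.push("tools=" + encodeURIComponent(options.tools.join(",")));
  }
  const base = MCP_ENDPOINTS[options.region];
  return query.length > 0 ? base + "?" + query.join("&") : base;
}

// Example with placeholder tool names (not real Unified tool identifiers).
const euUrl = buildMcpUrl({ region: "eu", tools: ["list_contacts", "get_contact"] });
console.log(euUrl);
```

Keeping the region and the filters in one options object makes it easy to switch between the US and EU endpoints without touching the rest of the client code.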